I can't find this information anywhere on the net, so I'll put it here. I've always been fascinated by the various alternatives to pure deferred shading, so I was really excited when I first encountered a light indexed variant.

Backstory: Halo Wars

I tired of the challenges inherent in pure deferred rendering approaches by around 2005, so I purposely used several deferred rendering alternatives on Halo Wars. HW combined X360 specific multi-light forward lighting for precise lighting (or for lights that required dynamic shadowing and/or cookies), along with an approximate voxel-based method for much cheaper, lower priority "bulk" lights.

The voxel-based approximate approach used two volume textures: one contained the total incoming light at each voxel's center (irradiance), and the other contained the weighted average of all the light vectors from all the lights that influenced each voxel (with the total weight in alpha). From this data, it was possible to compute approximate exit radiances at any point/normal. This "voxel light buffering" system was very efficient and scalable, so most omni/spot lights in the game used it to illuminate terrain chunks and meshes. As an added bonus, it was easy and cheap to illuminate particles using this approach too.
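To make the two-texture idea concrete, here is a rough CPU-side sketch in Python of what one voxel might store and how a diffuse term could be recovered from it. This is not the shipped Halo Wars code: the light layout (`pos`, `color`, `radius`), the linear falloff, the choice of weighting each light vector by its mean intensity, and the `max(0, N.L)` reconstruction are all assumptions made for illustration.

```python
import math

def accumulate_voxel(voxel_center, lights):
    """Return (irradiance_rgb, avg_light_dir, total_weight) for one voxel.

    lights: list of dicts with 'pos', 'color', 'radius' (hypothetical layout).
    """
    irradiance = [0.0, 0.0, 0.0]
    dir_sum = [0.0, 0.0, 0.0]
    total_weight = 0.0
    for light in lights:
        to_light = [l - c for l, c in zip(light["pos"], voxel_center)]
        dist = math.sqrt(sum(d * d for d in to_light))
        if dist == 0.0 or dist >= light["radius"]:
            continue  # light does not reach this voxel center
        atten = 1.0 - dist / light["radius"]   # assumed linear falloff
        contrib = [c * atten for c in light["color"]]
        weight = sum(contrib) / 3.0             # assumed: weight by mean intensity
        for i in range(3):
            irradiance[i] += contrib[i]
            dir_sum[i] += (to_light[i] / dist) * weight
        total_weight += weight
    if total_weight > 0.0:
        avg_dir = [d / total_weight for d in dir_sum]
    else:
        avg_dir = [0.0, 0.0, 0.0]
    return irradiance, avg_dir, total_weight

def shade_diffuse(irradiance, avg_dir, normal):
    """Approximate exit radiance for a unit normal (assumed Lambert-style term)."""
    length = math.sqrt(sum(d * d for d in avg_dir))
    if length == 0.0:
        return [0.0, 0.0, 0.0]
    n_dot_l = max(0.0, sum(n * d / length for n, d in zip(normal, avg_dir)))
    return [e * n_dot_l for e in irradiance]
```

When several lights hit the same voxel from different directions, the averaged vector shortens, which is one plausible reason the result degrades gracefully rather than breaking outright.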

We couldn't use multi-light forward on everything because the GPU fillrate cost from each added light was too high, and our object vs. light overlap check (just simple bounding box vs. sphere or cone) was very rough. Especially on terrain chunks, the extra pixel cost was brutal.

I had the GPU update the voxel textures each frame. Lights became as cheap as super low-res sprites, so we could have hundreds of them. The voxel texture's bounds in worldspace always covered the terrain in the view frustum (up to a certain depth and world height). The resolution was either 64x64x16 or 128x128x16 (from memory).
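As a small illustration of how a world-space point might be mapped into a grid of that size, here is a hedged Python sketch. The function name, the axis-aligned bounds, and the assumption that the third axis is the 16-slice height axis are all hypothetical; the real bounds were recomputed to follow the view frustum.

```python
def world_to_voxel(pos, bounds_min, bounds_max, res=(64, 64, 16)):
    """Map a world-space point to integer voxel coordinates, or None if outside."""
    coords = []
    for p, lo, hi, n in zip(pos, bounds_min, bounds_max, res):
        t = (p - lo) / (hi - lo)      # normalized position along this axis
        if t < 0.0 or t >= 1.0:
            return None               # outside the volume on this axis
        coords.append(int(t * n))
    return tuple(coords)
```

Anything that falls outside the volume would need some fallback (no bulk lighting, or clamping to the nearest voxel); the sketch simply reports `None`.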

I found this approach interesting because it could achieve both diffuse and specular lighting, and because it visually degraded well when more than one light hit a voxel. Anyhow, after Ensemble collapsed I put all this stuff into the back of my mind.

Source 2

When I first came to Valve after HW shipped (late 2009), I kept encouraging the graphics programmers to look for another viable alternative to deferred rendering. One day, in early to mid 2010, I came in to work and Shanon Drone showed me his amazing prototype implementation of a voxel based forward rendering variant of what is known as Light Indexed Deferred Lighting. (So what would the name be? Voxel Based Light Indexed Forward Lighting?)

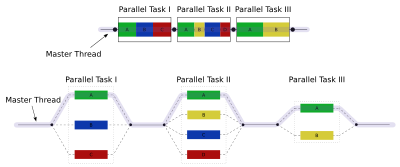

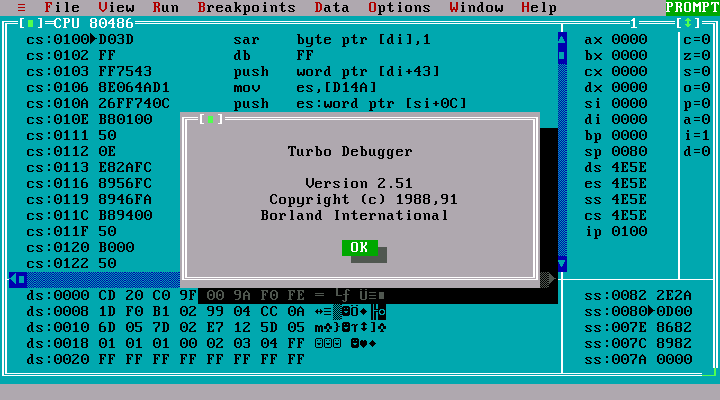

From what I remember, his approach voxelized in projection space. Each voxel contained an integer index into a list of lights that overlapped with that voxel's worldspace bounding box. The light lists were stored in a separate texture. The CPU (using SIMD instructions) was used to update the voxel texture, and the CPU also filled in the lightlist texture.
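The CPU-side binning step can be sketched roughly as follows, in Python for readability. This is only an illustration of the general idea, not the prototype's code: it takes precomputed worldspace boxes per voxel (the projection-space voxelization itself is not shown), treats every light as a sphere, and stores an `(offset, count)` pair per voxel, which is an assumption since the original layout is not known.

```python
def sphere_overlaps_aabb(center, radius, box_min, box_max):
    """True if a sphere touches or intersects an axis-aligned box."""
    dist_sq = 0.0
    for c, lo, hi in zip(center, box_min, box_max):
        nearest = min(max(c, lo), hi)   # closest point on the box along this axis
        dist_sq += (c - nearest) ** 2
    return dist_sq <= radius * radius

def build_light_lists(voxel_boxes, lights):
    """voxel_boxes: list of (box_min, box_max); lights: list of (center, radius).

    Returns (voxel_table, light_indices), where voxel_table[v] = (offset, count)
    points at a run of light indices inside the flat light_indices list.
    """
    voxel_table = []
    light_indices = []
    for box_min, box_max in voxel_boxes:
        offset = len(light_indices)
        for i, (center, radius) in enumerate(lights):
            if sphere_overlaps_aabb(center, radius, box_min, box_max):
                light_indices.append(i)
        voxel_table.append((offset, len(light_indices) - offset))
    return voxel_table, light_indices
```

In this layout `voxel_table` plays the role of the voxel texture and `light_indices` the role of the lightlist texture. A real implementation would vectorize the overlap test rather than loop over every voxel/light pair.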

The scene was rendered just like with forward lighting, except the pixel shader fetched from the voxel light index texture, and then iterated over all the lights using a dynamic loop. The light parameters were fetched from the lightlist texture. I don't remember where the # of lights per voxel was stored, it could have been in either texture.
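Here is a minimal Python stand-in for that per-pixel loop, assuming the voxel fetch yields an `(offset, count)` pair into a flat index list. The parameter layout, the linear falloff, and the diffuse-only shading are hypothetical simplifications; the actual shader and its data packing are not described in enough detail to reproduce.

```python
import math

def shade_pixel(position, normal, voxel_entry, light_indices, light_params):
    """Sum diffuse lighting from only the lights listed for this pixel's voxel."""
    offset, count = voxel_entry            # assumed result of the voxel texture fetch
    color = [0.0, 0.0, 0.0]
    for k in range(offset, offset + count):    # the "dynamic loop"
        light = light_params[light_indices[k]]  # fetch parameters via the index
        to_light = [l - p for l, p in zip(light["pos"], position)]
        dist = math.sqrt(sum(d * d for d in to_light))
        if dist == 0.0 or dist >= light["radius"]:
            continue
        n_dot_l = max(0.0, sum(n * d for n, d in zip(normal, to_light)) / dist)
        atten = 1.0 - dist / light["radius"]    # assumed linear falloff
        for i in range(3):
            color[i] += light["color"][i] * n_dot_l * atten
    return color
```

The point of the structure is that the loop length depends only on how many lights touch the voxel, not on how many lights exist in the scene.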

The new approach was ported and tested on X360, and I remember we switched to packing the light indices (or something - it's been a long time!) into an array of constant registers to make the approach practical at all on X360.

I have no idea if this is published anywhere, but I remember the day very clearly as an internal breakthrough. It's been many years now, so I doubt anyone will mind me mentioning it.

Published material on light indexed deferred/forward rendering techniques (or similar variants):

I'll update this blog post as I run across links:

Technical Paper on Light Indexed Deferred Lighting - Dec. 2007

Research into light indexed deferred rendering (Google Code)

Research into light indexed deferred rendering (github)

Practical Clustered Shadowing - Avalanche’s solution in Just Cause 3

Tiled Forward Shading Links

Forward vs Deferred vs Forward+ Rendering with DirectX 11 - by Jeremiah van Oosten

Misc: